How to build a Serverless Data Platform on AWS

With the growing popularity of Serverless, I wanted to explore how to build a (Big) Data platform using Amazon’s serverless services. In this article, we will look at what a data platform is and the potential benefits of building one with serverless services. We will also look at the architectures of some serverless data platforms being used in the industry.

What is a Data Platform?

Over the last decade, software applications have been generating more data than ever before. As computation and storage have become cheaper, it is now possible to process and analyze large amounts of data faster and more economically than ever.

A data platform is generally made up of smaller services that perform functions such as:

- Data Ingestion: This involves collecting and ingesting raw data from multiple sources such as databases, mobile devices, and logs. The ingestion layer is expected to handle both structured and unstructured data, arriving either in real time or in batches.

- Data Storage: A data platform requires a secure, scalable and reliable data store. This data store holds both the raw and the processed data.

- Data Catalog (or metadata service): This service stores and manages metadata about the data, such as the schema and location of each dataset (or table).

- Data Transformation: In this stage, the data is transformed from its raw state into a format that can be consumed by downstream services.

- Data Analysis & Querying: Once the data has been transformed, it can be consumed by business intelligence tools or queried directly, in a self-service manner, by analysts and other users to derive insights.

Some of the more common uses of a Data Platform are:

- Business intelligence: Building dashboards and reports that answer questions such as how a particular product is performing. This is sometimes referred to as “descriptive analytics”.

- Predictive analytics: Estimating the probability of a given event. Examples include fraud detection and other machine learning applications.

Things to consider when building a Data Platform

While building a data platform, some of the things to keep in mind are:

Workload patterns: Will the platform process real-time streams, batch data, or both? Will it serve short transactional requests or longer, more complex analytical queries?

Operational Complexity: How quickly can the different services be provisioned? How easy is it to debug any issues that might come up? Are the services managed?

Scale: Is it possible to start small and grow the platform over time? Would it require a re-design to do that?

Cost: What is the pricing model for the services? Do you need to pay for idle capacity, or only for what you actually use?

Why build a serverless data platform

Serverless architectures significantly reduce operational cost and complexity, which makes them a good fit for building a data platform. A serverless approach can also shorten the time it takes to spin up a fully functional data platform compared with traditional “big-data” platforms. Other benefits of a serverless data platform include:

- Flexible data ingestion and consumption

- Cheap and reliable storage

- Support for ad-hoc querying of data

Let’s take a look at some of the services that can be used to build a serverless data platform:

Data Ingestion

Kinesis: Kinesis makes it easy to collect, process and analyze real-time streaming data.

API Gateway: API Gateway makes it easy to create and maintain APIs. It integrates with other AWS services such as Kinesis and Lambda, which makes it easier to ingest data from sources such as mobile apps.
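To make the API Gateway to Kinesis path more concrete, here is a minimal sketch of a Lambda function behind an API Gateway Lambda proxy integration that forwards a JSON batch to a Kinesis stream. It assumes the request body is a JSON object or an array of objects. The stream name `clickstream-events` and the `user_id` partition key are hypothetical. The Kinesis client is injected so the logic can be exercised without AWS credentials, and `boto3` is only imported when no client is passed in.

```python
import json

# Hypothetical stream name, used only for illustration.
STREAM_NAME = "clickstream-events"


def build_kinesis_records(events):
    """Convert parsed events into the Records list that Kinesis PutRecords accepts."""
    return [
        {
            # Kinesis expects the payload as bytes; one JSON object per line.
            "Data": (json.dumps(e) + "\n").encode("utf-8"),
            # Records sharing a partition key go to the same shard, which keeps their order.
            "PartitionKey": str(e.get("user_id", "anonymous")),
        }
        for e in events
    ]


def handler(event, context, kinesis_client=None):
    """API Gateway (Lambda proxy integration) handler that forwards a JSON batch to Kinesis."""
    try:
        body = json.loads(event.get("body") or "[]")
    except json.JSONDecodeError:
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}
    # Accept a single object or a list of objects; ignore anything else.
    candidates = body if isinstance(body, list) else [body]
    events = [e for e in candidates if isinstance(e, dict)]
    if not events:
        return {"statusCode": 200, "body": json.dumps({"accepted": 0})}
    if kinesis_client is None:
        import boto3  # imported lazily so the handler can be tested without AWS
        kinesis_client = boto3.client("kinesis")
    response = kinesis_client.put_records(
        StreamName=STREAM_NAME, Records=build_kinesis_records(events)
    )
    accepted = len(events) - response["FailedRecordCount"]
    return {"statusCode": 200, "body": json.dumps({"accepted": accepted})}
```

`PutRecords` accepts up to 500 records per call and can partially fail. A production version would split larger batches and retry the records reported as failed instead of only counting them.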

Storage

S3: S3 is an object storage service that can store and retrieve any amount of data at any time, and you pay only for the storage and requests you actually use. In a data platform, S3 can hold both the raw and the processed data.
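One common way to organize raw and processed data in the same bucket is to use separate prefixes ("zones") and Hive-style `key=value` date partitions. Athena and Glue crawlers can recognize these path segments as partitions. The sketch below only builds object keys. The `raw`/`processed` zone names and the `year/month/day` layout are assumptions for illustration, not a layout prescribed by S3.

```python
import uuid
from datetime import datetime

# Hypothetical zone names: "raw" holds data exactly as ingested,
# "processed" holds cleaned, query-ready data.
ZONES = {"raw", "processed"}


def object_key(zone: str, dataset: str, event_time: datetime, extension: str, file_id=None) -> str:
    """Build an S3 object key with Hive-style year/month/day partitions."""
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone}")
    # A random file id avoids collisions when many writers add files to the same partition.
    file_id = file_id or uuid.uuid4().hex
    return (
        f"{zone}/{dataset}/"
        f"year={event_time:%Y}/month={event_time:%m}/day={event_time:%d}/"
        f"{file_id}.{extension}"
    )
```

For example, `object_key("raw", "clickstream", datetime(2019, 5, 3), "json.gz", "abc123")` yields `raw/clickstream/year=2019/month=05/day=03/abc123.json.gz`. Queries that filter on `year`, `month` and `day` can then skip whole prefixes.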

Orchestration

Step Functions: Step Functions let you build workflows that coordinate multiple AWS services. For example, Step Functions can orchestrate the ETL jobs that prepare data for training machine learning models.
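Step Functions workflows are defined in the JSON-based Amazon States Language. The sketch below generates a two-step definition: run a Glue job and wait for it to finish, then invoke a Lambda function. The Glue job name and Lambda ARN are hypothetical parameters, and the retry settings are illustrative values rather than recommendations.

```python
import json


def etl_state_machine(glue_job_name: str, training_lambda_arn: str) -> str:
    """Return an Amazon States Language definition for a hypothetical ETL workflow."""
    definition = {
        "Comment": "Run a Glue ETL job, then hand off to a Lambda function",
        "StartAt": "TransformRawData",
        "States": {
            "TransformRawData": {
                "Type": "Task",
                # The .sync suffix makes Step Functions wait until the Glue job run completes.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job_name},
                # Illustrative retry policy for transient failures.
                "Retry": [
                    {
                        "ErrorEquals": ["States.ALL"],
                        "IntervalSeconds": 60,
                        "MaxAttempts": 2,
                        "BackoffRate": 2.0,
                    }
                ],
                "Next": "StartTraining",
            },
            "StartTraining": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                # "Payload.$": "$" passes the current state input through to the function.
                "Parameters": {"FunctionName": training_lambda_arn, "Payload.$": "$"},
                "End": True,
            },
        },
    }
    return json.dumps(definition, indent=2)
```

The resulting string could be passed as the `definition` argument when creating a state machine, for example with boto3's `create_state_machine`. That call also requires an IAM role allowed to start the Glue job and invoke the function.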

Data Transformation

AWS Glue: Glue is a fully managed ETL service that makes it easy to transform data stored in S3 into a form that Athena can query. Glue uses Spark under the hood, and the ETL code it generates is customizable. This allows flexibility such as invoking Lambda functions or other external services.

AWS Lambda: AWS Lambda lets you run functions without provisioning or managing any servers. Functions can be written in several languages, including Python, Go, Java, and JavaScript (Node.js), and can be used to transform raw data into the desired format. AWS also scales the functions automatically as demand changes.
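As an illustration of a Lambda-based transformation, here is a minimal sketch of a handler attached to a Kinesis stream. It decodes the base64-encoded record payloads, applies a simple transformation, and writes the batch to S3 as newline-delimited JSON. The bucket name, key prefix, and input field names (`user_id`, `action`, `timestamp`) are hypothetical. The S3 client is injected so the function can be tested without AWS.

```python
import base64
import json

# Hypothetical bucket and prefix for processed data.
BUCKET = "my-data-lake"
PREFIX = "processed/events"


def transform(raw):
    """Hypothetical transformation: keep selected fields and normalize the event type."""
    return {
        "user_id": raw.get("user_id"),
        "event_type": str(raw.get("action", "unknown")).lower(),
        "timestamp": raw.get("timestamp"),
    }


def handler(event, context, s3_client=None):
    """Decode a batch of Kinesis records, transform them, and write one NDJSON object to S3."""
    records = event.get("Records", [])
    lines = []
    for record in records:
        # Kinesis delivers each record's payload base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        lines.append(json.dumps(transform(json.loads(payload))))
    if not lines:
        return {"written": 0}
    if s3_client is None:
        import boto3  # imported lazily so the handler can be tested without AWS
        s3_client = boto3.client("s3")
    # Naming the object after the batch's first sequence number means a retried batch
    # that starts at the same record writes to the same key.
    key = f"{PREFIX}/{records[0]['kinesis']['sequenceNumber']}.json"
    body = ("\n".join(lines) + "\n").encode("utf-8")
    s3_client.put_object(Bucket=BUCKET, Key=key, Body=body)
    return {"written": len(lines), "key": key}
```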

Data Analysis

Athena: Athena makes it easy to analyze data in Amazon S3 using SQL. Amazon Athena charges based on the amount of data scanned by the query.
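Because Athena bills by data scanned, it helps to estimate query cost from bytes read. The sketch below assumes a price of $5.00 per TB scanned, per-megabyte rounding up, and a 10 MB minimum per query. These figures are assumptions to check against current Athena pricing for your region. It also treats 1 TB as 1024**4 bytes.

```python
import math

# Assumptions for illustration only; check current Athena pricing for your region.
PRICE_PER_TB_USD = 5.00
MB = 1024 ** 2
TB = 1024 ** 4  # assumption: 1 TB treated as 1024**4 bytes
MIN_BILLED_MB = 10


def query_cost_usd(bytes_scanned: int) -> float:
    """Estimated cost of one query: scanned bytes rounded up to whole MB, with a 10 MB minimum."""
    billed_mb = max(math.ceil(bytes_scanned / MB), MIN_BILLED_MB)
    return billed_mb * MB / TB * PRICE_PER_TB_USD


def monthly_cost_usd(bytes_per_query: int, queries_per_month: int) -> float:
    """Estimated monthly cost for a workload that repeats the same query."""
    return query_cost_usd(bytes_per_query) * queries_per_month
```

Under these assumptions, a query that scans 100 GB costs about $0.49. Anything that reduces the bytes scanned lowers the bill proportionally, such as compression, columnar formats like Parquet, or partition filters.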

Real-world Architectures

Next, we will look at examples of various serverless architectures for building data platforms.

Building a serverless architecture for data collection with AWS Lambda

- Data collection pipeline using Kinesis, API Gateway, Lambda, S3, and SQS

- The data collection pipeline receives events from multiple sources (frontend and backend).

- Lambda functions transform the events, which are then emitted to other downstream services.

- Kinesis streams handle the real-time events.
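A pipeline that accepts events from both frontend and backend sources typically normalizes them into one envelope before emitting them downstream. The following sketch shows that step plus batched delivery to SQS, one of the services listed for this pipeline. The source-specific field names (`eventName`, `props`, `type`, `data`) and the envelope shape are hypothetical. The SQS client is injected and is expected to expose boto3's `send_message_batch`.

```python
import json


def normalize(source, event):
    """Map source-specific event shapes onto one common envelope (field names are hypothetical)."""
    if source == "frontend":
        name, payload = event["eventName"], event.get("props", {})
    elif source == "backend":
        name, payload = event["type"], event.get("data", {})
    else:
        raise ValueError(f"unknown source: {source}")
    return {"source": source, "name": name, "payload": payload}


def sqs_batches(messages, batch_size=10):
    """Yield SendMessageBatch entry lists; SQS accepts at most 10 entries per call."""
    for start in range(0, len(messages), batch_size):
        chunk = messages[start:start + batch_size]
        # Ids only need to be unique within a single batch.
        yield [{"Id": str(i), "MessageBody": json.dumps(m)} for i, m in enumerate(chunk)]


def emit(messages, sqs_client, queue_url):
    """Send normalized events to a downstream SQS queue and return any failed entries."""
    failed = []
    for entries in sqs_batches(messages):
        response = sqs_client.send_message_batch(QueueUrl=queue_url, Entries=entries)
        failed.extend(response.get("Failed", []))
    return failed
```

SQS also limits the total payload size of a batch, so very large events would need smaller batches or a pointer to the payload stored in S3.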

Our data lake story: How Woot.com built a serverless data lake on AWS

Main architectural considerations were:

- Ease of use for their customers. End users have varying levels of technical experience, and the team wanted to make it easy for everybody to access the data.

- Minimal infrastructure maintenance. With only one engineer, it was important to use services with low operational overhead.

Based on their requirements, they used the following services:

- Amazon Kinesis Data Firehose for data ingestion

- Amazon S3 for data storage

- AWS Lambda and AWS Glue for data processing

- AWS Database Migration Service (AWS DMS) and AWS Glue for data migration

- AWS Glue for orchestration and metadata management

- Amazon Athena and Amazon QuickSight for querying and data visualization
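Kinesis Data Firehose can invoke a Lambda function to transform records before delivering them to S3. The function receives `records` with a `recordId` and base64 `data`. It must return each `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed`, plus the (re-encoded) data. The sketch below follows that contract. The dropped event type (`heartbeat`) and the removed field (`email`) are hypothetical examples, not details from the Woot.com write-up.

```python
import base64
import json

# Hypothetical rules, used only for illustration.
SENSITIVE_FIELDS = ("email",)
DROP_EVENT_TYPES = {"heartbeat"}


def handler(event, context):
    """Firehose data-transformation Lambda: clean JSON records, drop noise, flag bad input."""
    output = []
    for record in event["records"]:
        result = {"recordId": record["recordId"], "result": "Ok", "data": record["data"]}
        try:
            item = json.loads(base64.b64decode(record["data"]))
        except ValueError:  # covers invalid base64 (binascii.Error) and invalid JSON/UTF-8
            item = None
        if not isinstance(item, dict):
            result["result"] = "ProcessingFailed"
        elif item.get("event_type") in DROP_EVENT_TYPES:
            result["result"] = "Dropped"
        else:
            for field in SENSITIVE_FIELDS:
                item.pop(field, None)
            # One JSON object per line keeps the delivered files easy to query with Athena.
            line = json.dumps(item) + "\n"
            result["data"] = base64.b64encode(line.encode("utf-8")).decode("ascii")
        output.append(result)
    return {"records": output}
```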

How we built a big data platform on AWS for 100 users for under $2 a month

- They wanted to build a platform that allowed customers to access and visualize any of their data at any time of day.

- They also wanted to build a machine learning pipeline on this data.

While designing the system, they found that Redshift was too expensive for their use case, so they ended up relying heavily on Athena. Tuning the system according to best practices (such as compressing the data) saved them a lot of money and time.

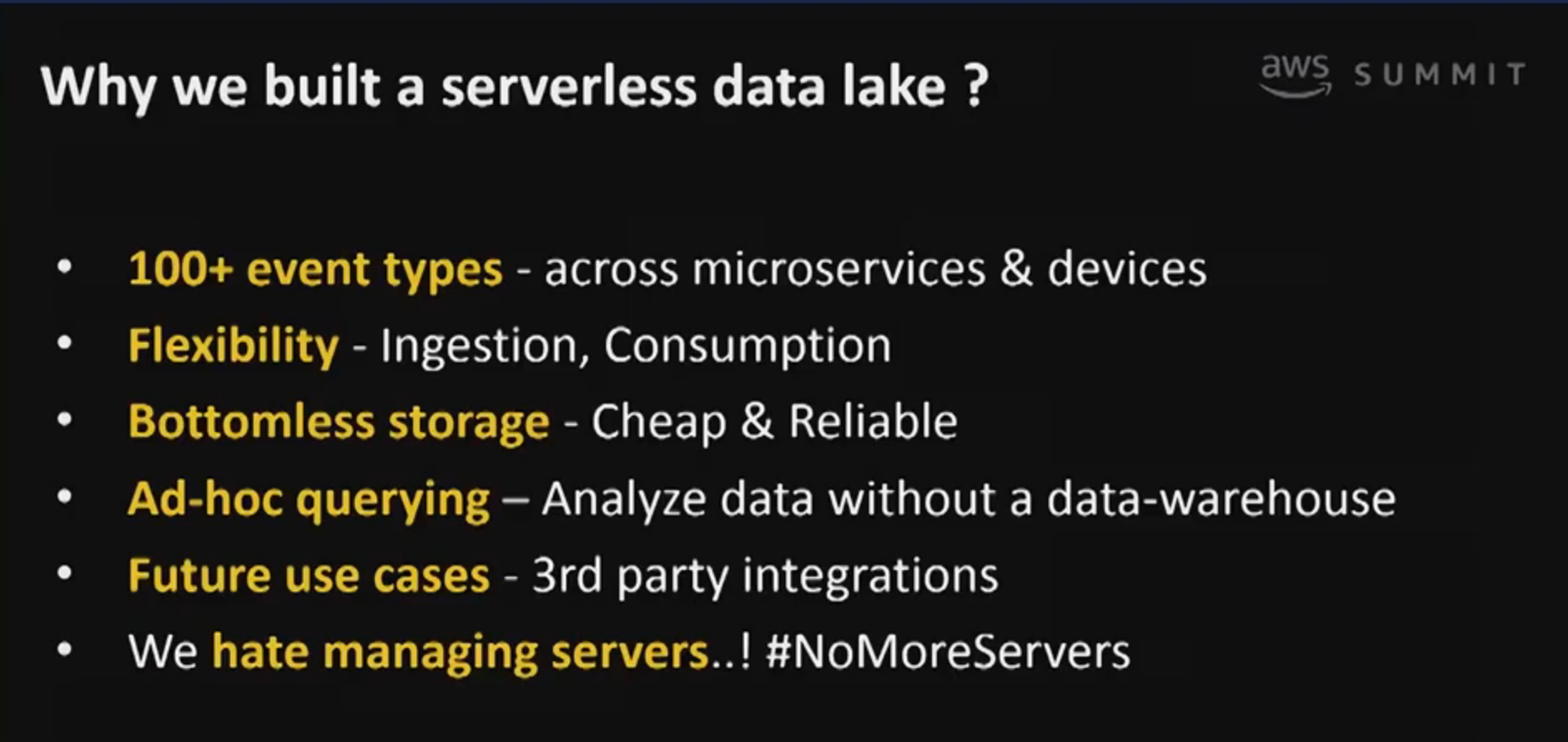
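Compression helps with Athena because Athena reads compressed files from S3 directly, so less data is scanned per query. The sketch below uses only the standard library to compare the size of newline-delimited JSON before and after gzip. The sample rows are synthetic and highly repetitive, so the resulting ratio says nothing about real data; measure your own.

```python
import gzip
import json


def to_ndjson(rows):
    """Serialize rows as newline-delimited JSON, one object per line."""
    return "".join(json.dumps(row) + "\n" for row in rows).encode("utf-8")


def compressed_size(rows):
    """Return (raw_bytes, gzip_bytes) for the NDJSON encoding of rows."""
    raw = to_ndjson(rows)
    return len(raw), len(gzip.compress(raw))


# Synthetic sample rows for illustration; real ratios depend entirely on the data.
rows = [{"user_id": i % 50, "event_type": "page_view", "path": "/products"} for i in range(10_000)]
raw_bytes, gz_bytes = compressed_size(rows)
print(f"raw={raw_bytes} bytes, gzip={gz_bytes} bytes, ratio={raw_bytes / gz_bytes:.1f}x")
```

Columnar formats such as Parquet go further than row-oriented compression, because a query that touches only a few columns can skip the rest.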

My Data Lake Story: How I Built a Serverless Data Lake on AWS

In this article, the author explains why they built a serverless data lake and describes the resulting architecture:

- Amazon API Gateway, Amazon Kinesis Data Streams, and Amazon Kinesis Data Firehose for data ingestion

- Amazon S3 for data storage

- AWS Lambda for data processing and decorating

- Amazon Athena for querying

- Amazon CloudWatch Events for scheduling

- Amazon API Gateway for data visualization backend
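To illustrate how CloudWatch Events scheduling and Athena can fit together, here is a minimal sketch of a Lambda function triggered by a schedule rule such as `cron(0 2 * * ? *)`. It builds a query over the previous day's partition and starts it in Athena. The database `analytics`, table `events`, its string partition columns `year`/`month`/`day`, and the results location are all hypothetical. The Athena client is injected so the logic can be tested without AWS.

```python
from datetime import datetime, timedelta

# Hypothetical names, used only for illustration.
DATABASE = "analytics"
OUTPUT_LOCATION = "s3://my-athena-results/daily/"


def daily_query(run_time: datetime) -> str:
    """Aggregate the previous day's partition of a hypothetical `events` table."""
    day = (run_time - timedelta(days=1)).date()
    return (
        "SELECT event_type, count(*) AS events "
        "FROM events "
        f"WHERE year = '{day:%Y}' AND month = '{day:%m}' AND day = '{day:%d}' "
        "GROUP BY event_type"
    )


def handler(event, context, athena_client=None):
    """Triggered by a scheduled CloudWatch Events rule; starts the daily Athena query."""
    # Scheduled events carry their trigger time in the "time" field, in UTC.
    run_time = datetime.strptime(event["time"], "%Y-%m-%dT%H:%M:%SZ")
    if athena_client is None:
        import boto3  # imported lazily so the handler can be tested without AWS
        athena_client = boto3.client("athena")
    response = athena_client.start_query_execution(
        QueryString=daily_query(run_time),
        QueryExecutionContext={"Database": DATABASE},
        ResultConfiguration={"OutputLocation": OUTPUT_LOCATION},
    )
    return response["QueryExecutionId"]
```

Filtering on the partition columns lets Athena read only one day's files instead of the whole table. `start_query_execution` returns immediately, so a visualization backend would later fetch results using the returned query execution id.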

This article is a practical, real-world example of building a data platform. These are the steps they followed:

- Scrape data from craigslist.com using Python and Lambda

- Build and catalog a mini data lake using AWS Glue

- Use Glue to transform JSON data to Parquet format

- Query Parquet data stored in S3 with standard SQL via Athena

- Visualize the data in a dashboard using Amazon QuickSight
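The scraping step usually amounts to parsing listing pages into newline-delimited JSON, which Glue can then catalog and convert to Parquet. Below is a standard-library sketch of that parsing step. The markup it expects (`li.result-row`, `.result-title`, `.result-price`) is hypothetical and not taken from the article or the live site. Fetching the page and writing the output to S3 from Lambda are left out.

```python
import json
from html.parser import HTMLParser


class ListingParser(HTMLParser):
    """Collect listing titles and prices from hypothetical markup like:
    <li class="result-row"><a class="result-title">...</a><span class="result-price">$100</span></li>
    """

    def __init__(self):
        super().__init__()
        self.listings = []
        self._field = None  # which field the current text belongs to, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "result-row" in classes:
            self.listings.append({})
        elif self.listings and "result-title" in classes:
            self._field = "title"
        elif self.listings and "result-price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self.listings[-1][self._field] = data.strip()


def to_ndjson(html: str) -> str:
    """Parse listing HTML and return one JSON object per line."""
    parser = ListingParser()
    parser.feed(html)
    parser.close()
    return "".join(json.dumps(item) + "\n" for item in parser.listings)
```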

AWS Summit Singapore - Architecting a Serverless Data Lake on AWS

This talk covers the best practices for building a serverless data lake.

Conclusions

As serverless services become more powerful, we are going to see more conventional architectures migrate to serverless. For anybody looking to build a new data platform, I believe starting with serverless might be a great choice.